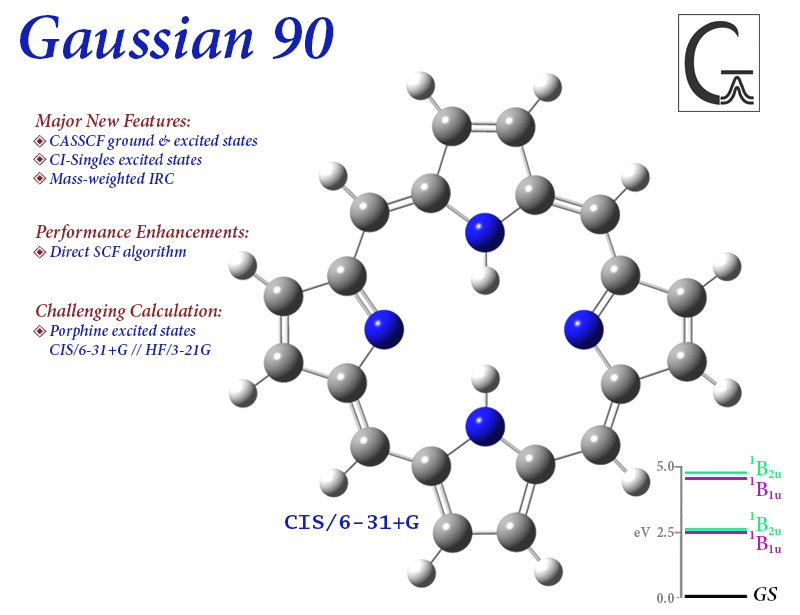

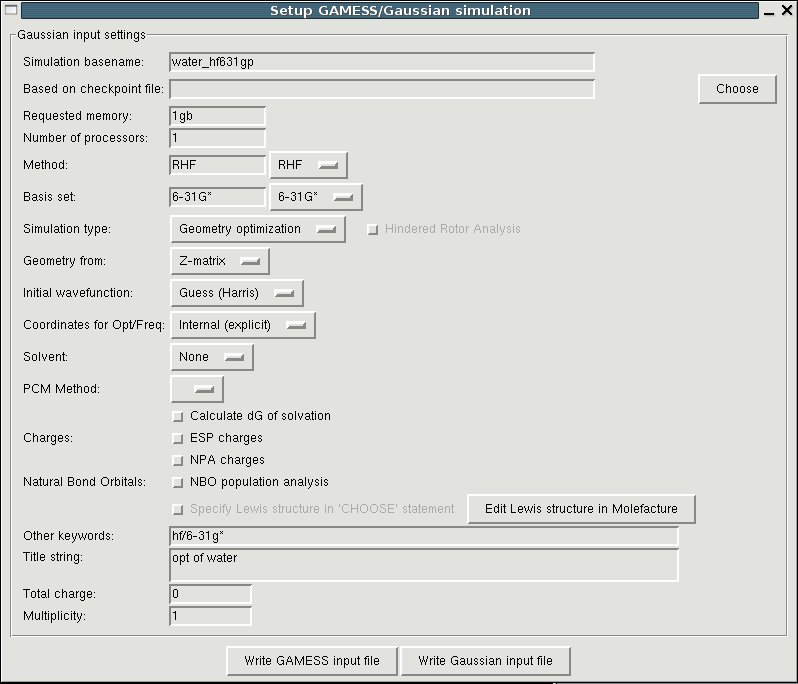

The methodology is demonstrated on a wavelength demultiplexer example. To obtain a clearly binarized design, a dynamic growth mechanism is imposed on the projection strength in the parameterization. By incorporating volume constraints, the optimized design achieves equally high performance while significantly reducing material usage. Our method shows superior performance compared with state-of-the-art approaches.

Gaussian was released in 1970 by John Pople and his research group. It uses the fundamental laws of quantum mechanics to predict energies, molecular structures, and spectroscopic data (NMR, IR, UV, etc.), and to perform much more advanced calculations.
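The projection step and its growing strength can be illustrated with the widely used tanh threshold projection. This is a minimal sketch under stated assumptions. The source does not name its projection function, so the tanh form is an assumption. The doubling schedule for the projection strength `beta` is hypothetical. The names `tanh_projection`, `beta_schedule`, and `volume_fraction` are illustrative and are not the authors' code.

```python
import numpy as np


def tanh_projection(rho, beta, eta=0.5):
    """Smoothed threshold projection of design densities rho in [0, 1].

    Larger beta (projection strength) pushes values toward 0 or 1;
    eta is the threshold. The end points 0 and 1 map to themselves.
    """
    num = np.tanh(beta * eta) + np.tanh(beta * (rho - eta))
    den = np.tanh(beta * eta) + np.tanh(beta * (1.0 - eta))
    return num / den


def beta_schedule(iteration, beta0=1.0, growth=2.0, every=50, beta_max=256.0):
    """Hypothetical growth rule: multiply beta by `growth` every `every`
    iterations, capped at beta_max."""
    return min(beta0 * growth ** (iteration // every), beta_max)


def volume_fraction(rho_projected):
    """Fraction of the design region occupied by material (mean projected density)."""
    return float(np.mean(rho_projected))


if __name__ == "__main__":
    rho = np.array([0.1, 0.4, 0.6, 0.9])
    for it in (0, 100, 400):
        beta = beta_schedule(it)
        projected = tanh_projection(rho, beta)
        print(it, beta, np.round(projected, 3), volume_fraction(projected))
```

As `beta` grows, intermediate densities such as 0.4 and 0.6 are pushed toward 0 and 1, which yields a binarized layout. The mean projected density is one simple measure of the material usage that a volume constraint would limit.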

Gaussian software optimization series

Gaussian is a computer program used by chemists, chemical engineers, biochemists, physicists, and other scientists.

A series of adaptive strategies for updating the smoothing radius and the learning rate is developed to improve computational efficiency and robustness.
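The source does not spell out its adaptive strategies. As a purely hypothetical illustration of the idea, the sketch below uses a simple plateau rule, similar in spirit to reduce-on-plateau learning-rate schedules. When the loss stops improving for a while, both the smoothing radius `sigma` and the learning rate `lr` are shrunk. `AdaptiveState`, `adapt`, and all default values are assumptions, not the authors' strategy.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveState:
    sigma: float                     # current smoothing radius
    lr: float                        # current learning rate
    best_loss: float = float("inf")  # best loss seen so far
    stall: int = 0                   # iterations since the last improvement


def adapt(state, loss, patience=5, shrink=0.5, sigma_min=1e-3, lr_min=1e-4):
    """Hypothetical plateau rule: after `patience` iterations without
    improvement, shrink the smoothing radius and the learning rate,
    never going below sigma_min and lr_min."""
    if loss < state.best_loss:
        state.best_loss = loss
        state.stall = 0
    else:
        state.stall += 1
        if state.stall >= patience:
            state.sigma = max(state.sigma * shrink, sigma_min)
            state.lr = max(state.lr * shrink, lr_min)
            state.stall = 0
    return state


if __name__ == "__main__":
    state = AdaptiveState(sigma=1.0, lr=0.1)
    for loss in [3.0, 2.5, 2.6, 2.7, 2.6, 2.8, 2.9, 2.4]:
        adapt(state, loss)
        print(f"loss={loss:.1f} sigma={state.sigma:.3f} lr={state.lr:.4f}")
```

One motivation for shrinking `sigma` over time is that a large radius favors broad, nonlocal exploration, while a smaller one refines the design locally once progress stalls.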

However, the current DGS method is designed for unbounded and unconstrained optimization problems. This makes it inapplicable to real-world engineering design problems, where the tuning parameters are often bounded and the loss function is usually constrained by physical processes. In this work, we propose to extend the DGS approach to a constrained inverse design framework in order to find better designs. The proposed framework is portable and flexible: it naturally combines the parameterization, the physics simulation, and the objective formulation into an effective inverse design workflow.
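Two common ways to handle bounded parameters and constraints are projection onto the box of admissible values and an exterior penalty on constraint violation. The sketch below shows both. The source does not say which technique its extended DGS framework uses, so this is an assumption. The names `penalized_loss` and `projected_step` are hypothetical. In a DGS setting, the penalized loss would be the black-box function whose nonlocal gradient is estimated.

```python
import numpy as np


def penalized_loss(f, x, volume, v_max, mu=10.0):
    """Quadratic exterior penalty for the inequality constraint volume(x) <= v_max.

    Feasible designs pay no penalty; infeasible ones pay mu * violation**2.
    """
    violation = max(volume(x) - v_max, 0.0)
    return f(x) + mu * violation ** 2


def projected_step(x, grad, lr, lower=0.0, upper=1.0):
    """Gradient-descent step followed by projection (clipping) onto [lower, upper]."""
    return np.clip(x - lr * grad, lower, upper)


if __name__ == "__main__":
    def volume(x):
        # Material fraction of the design.
        return float(np.mean(x))

    def toy_loss(x):
        # Toy stand-in for a physics-based figure of merit.
        return float(np.sum((x - 0.8) ** 2))

    x = np.full(4, 0.5)
    print(penalized_loss(toy_loss, x, volume, v_max=0.3))
    print(projected_step(np.array([0.05, 0.5, 0.95]), np.array([1.0, 0.0, -1.0]), lr=0.1))
```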

Many Gaussian process packages are available in R. For example, BACCO offers some calibration techniques, mlegp and tgp focus on treed models and parameter estimation, and GPML provides Gaussian process classification and regression. The problem with these packages is that the choice of correlation function is restricted.

A directional Gaussian smoothing (DGS) approach was proposed in our recent work and used to define a truly nonlocal gradient, referred to as the DGS gradient, to enable nonlocal exploration in high-dimensional black-box optimization. Promising results show that replacing the traditional local gradient with the nonlocal DGS gradient can significantly improve the performance of gradient-based methods on highly multimodal loss functions.
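The DGS gradient can be sketched as follows, assuming the standard construction. The function is smoothed with a 1D Gaussian along each of d orthonormal directions, and the derivative of each smoothed 1D function is approximated with Gauss-Hermite quadrature. The resulting directional derivatives are then mapped back through the direction matrix. The function name `dgs_gradient`, the defaults for `sigma` and `num_points`, and the identity default for `directions` are illustrative choices. The only library routine relied on is NumPy's `hermgauss`.

```python
import numpy as np


def dgs_gradient(f, x, sigma=0.5, num_points=7, directions=None):
    """Directional Gaussian smoothing (DGS) gradient estimate of f at x.

    Along each orthonormal direction xi_i (a row of `directions`), the
    derivative of the 1D Gaussian-smoothed function is approximated by
        D_i = 1/(sqrt(pi)*sigma) * sum_m w_m * sqrt(2) * v_m * f(x + sqrt(2)*sigma*v_m*xi_i),
    where (v_m, w_m) are Gauss-Hermite nodes and weights. The gradient
    estimate is then directions.T @ [D_1, ..., D_d].
    """
    x = np.asarray(x, dtype=float)
    d = x.size
    if directions is None:
        directions = np.eye(d)  # coordinate axes as the default orthonormal basis
    nodes, weights = np.polynomial.hermite.hermgauss(num_points)  # weight exp(-v**2)
    derivs = np.empty(d)
    for i, xi in enumerate(directions):
        # Sample f along the line through x in direction xi at the scaled nodes.
        values = np.array([f(x + np.sqrt(2.0) * sigma * v * xi) for v in nodes])
        derivs[i] = np.sum(weights * np.sqrt(2.0) * nodes * values) / (np.sqrt(np.pi) * sigma)
    return directions.T @ derivs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q, _ = np.linalg.qr(rng.normal(size=(3, 3)))  # columns of q are orthonormal
    x0 = np.array([1.0, -2.0, 0.5])
    print(dgs_gradient(lambda z: float(np.sum(z**2)), x0, directions=q.T))
```

For a quadratic such as `sum(z**2)`, Gaussian smoothing only adds a constant, so the estimate reproduces the exact gradient `2 * x0` up to rounding. On multimodal functions, a larger `sigma` averages out small-scale oscillations, which is the source of the nonlocal behavior. In that regime `num_points` may need to grow for the quadrature to stay accurate.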

Local-gradient-based optimization approaches lack the nonlocal exploration ability needed to escape from local minima in non-convex landscapes.
